Augmentic BV

Haaswijkweg east 12B

3319 GC Dordrecht

The Netherlands

The Stanford study shows that people do not want to use AI everywhere: they consciously choose which tasks they want to share with technology. Not everything that can be done needs to be done. This confirms Augmentic's vision: AI agents should support work, not take it over. This is how we are building smarter, more humane workdays with Augmentic OS.

In discussions about AI at work, two extremes often dominate: on the one hand, the fear that technology will make people redundant; on the other, the promise of complete relief. What is often missing from this polarized debate is the voice of the people themselves: the employees who are already noticing AI working its way into their tasks, processes and rhythms.

A recent study from Stanford University offers exactly that perspective. No futuristic predictions or theoretical models, but an in-depth survey of what people themselves want - and don't want - when it comes to deploying AI agents at work. The results are surprisingly practical. And they hold up a much-needed mirror, especially now that many organizations are dazzled by the possibilities of technology without asking themselves whether it actually serves people.

Stanford asked 1,500 people from more than 100 different professions in the United States for their opinions on AI. Not about "the future of work" in the abstract, but about their daily tasks: which parts of their work would they want taken over by an AI agent? And which ones absolutely not?

The researchers combined these preferences with technical feasibility. The result is a concrete and actionable overview: where AI can add value today - and where it is better kept at a distance.

Note that this is American research. The context differs in some respects from the Dutch labor market. But the common thread - the need for autonomy, cooperation and meaning in work - is universally recognizable.

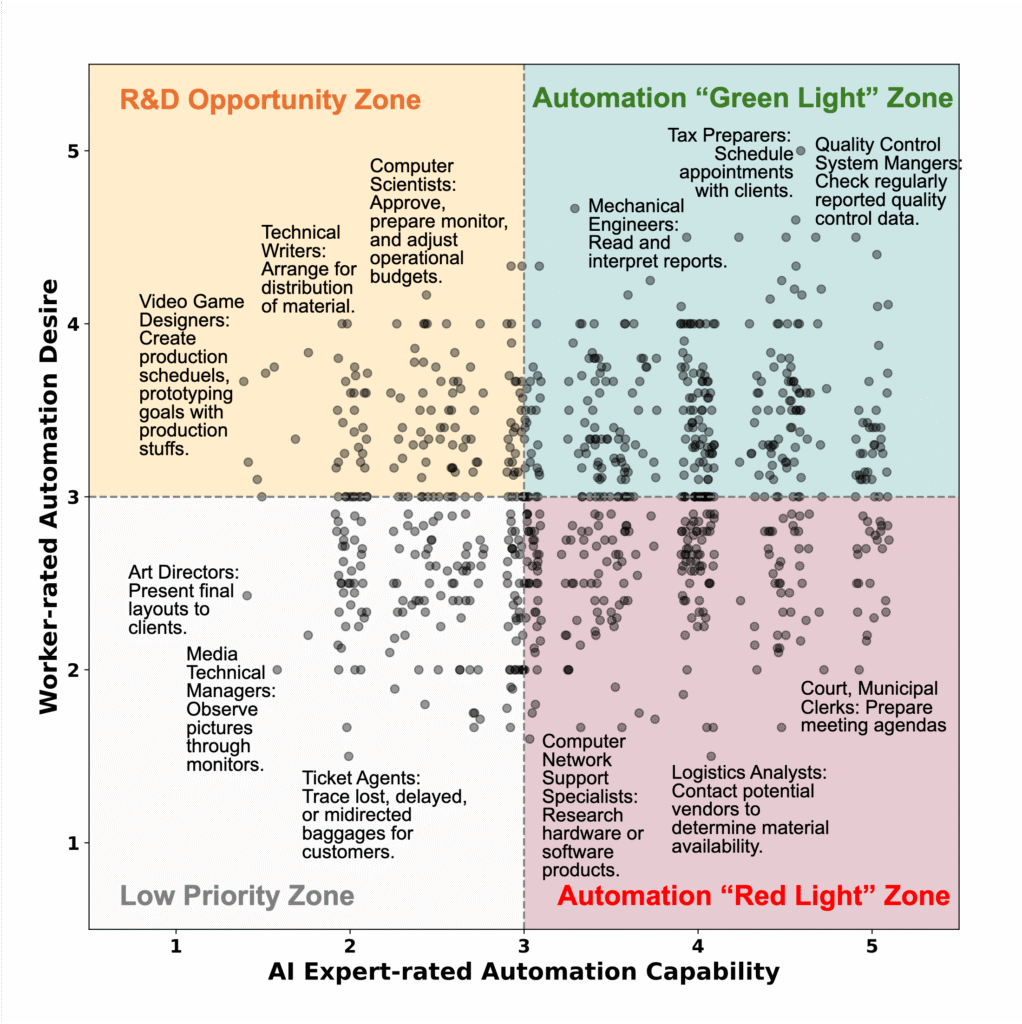

The study categorizes the deployment of AI into four identifiable zones:

This classification helps organizations make choices. Not out of technology-driven enthusiasm, but starting from the question: what will make work better?

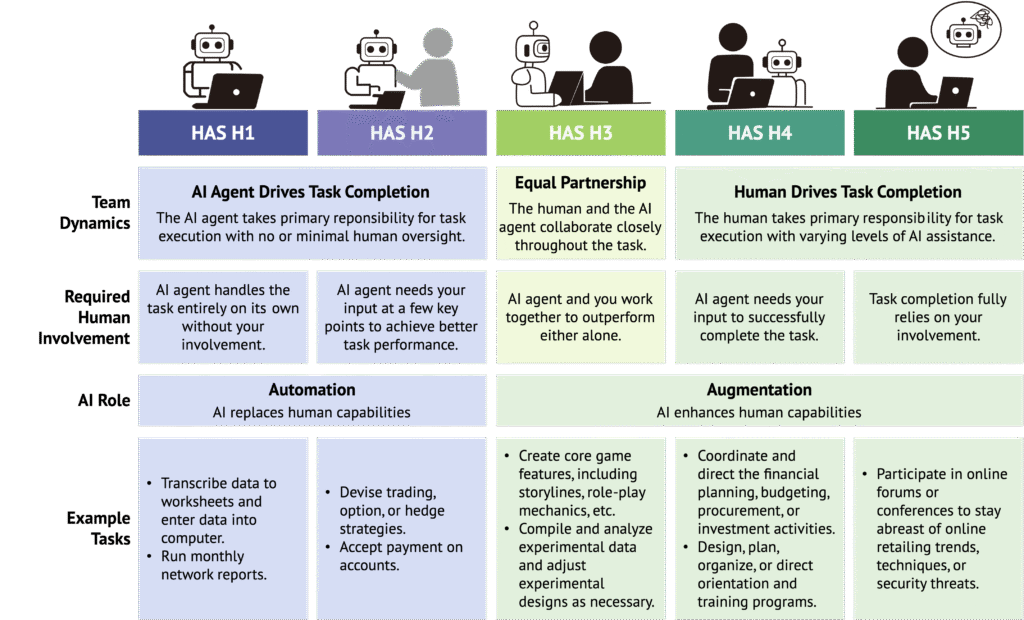

A major innovation of the study is the introduction of the Human Agency Scale. This scale measures the extent to which people want to remain in control of their work, ranging from fully autonomous AI action to full human direction.

What stands out:

The lesson is clear: AI must adapt to human preferences - not the other way around.

For many companies, the focus is still on technological feasibility: what can AI do? But this study shows that an equally important question is: what do people want AI to do?

This requires a fundamentally different approach to AI implementation:

In European organizations, where work culture is strongly intertwined with meaning and autonomy, this approach is not a luxury but a necessity.

What this study makes especially clear: the success of AI lies not in the technology itself, but in how well we manage to use that technology meaningfully. It is a call for organizations to think not only in terms of automation, but also in terms of collaboration. Not "What can AI take from us?" but "What do we want AI to enhance?"

At Augmentic, this is exactly where we start. Our vision of Agentic AI doesn't start from the machine, but from the human. We build agents that are not separate tools, but functional colleagues: with a goal, a set of tasks, and a place in the work process. Agents that work transparently, make measurable contributions, and at the same time leave room for what people do not want to lose: their creativity, their judgment, their autonomy.

With our platform, Augmentic OS, we give organizations the tools to make that collaboration concrete. To redistribute work - not to write people off, but to bring them back to the core of what they do. That, in our view, is the real promise of AI: a workday that is designed smarter, so it feels more human.

📚 Source

Shao, Zope, Jiang, et al. (2025). Future of Work with AI Agents: Auditing Automation and Augmentation Potential across the U.S. Workforce. Stanford University. https://arxiv.org/pdf/2506.06576